Why Use a Structured Cabling System?

The global data center market is poised for substantial growth, with projections of more than 6% growth per year through the rest of this decade. This expansion is driven largely by artificial intelligence (AI), internet streaming, and gaming. As demand accelerates, data centers are becoming more sophisticated and more automated, supporting diverse applications and a growing number of compute and storage devices to handle the escalating workloads of the digital era.

In a constantly changing data center, a well-designed structured cabling system is essential. Whether a device needs a copper or a fiber connection, a patch panel design makes the frequent changes and upgrades of today’s fast-paced data center environments easier and more efficient to carry out. The Telecommunications Industry Association (TIA) documents these practices in its TIA-942 standard, while the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) address data center cabling globally in ISO/IEC 24764.

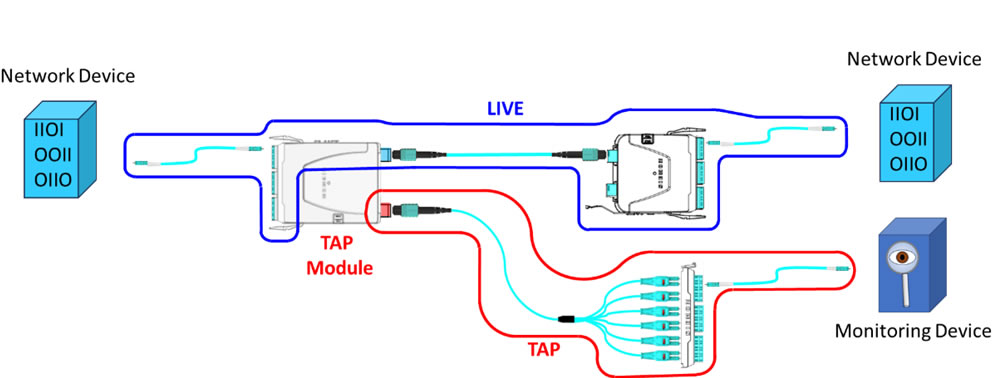

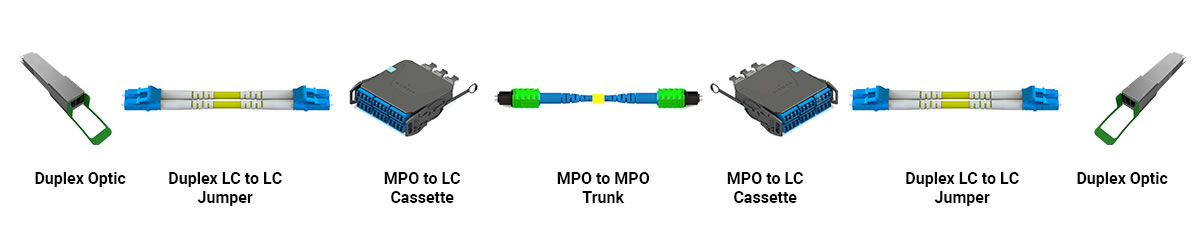

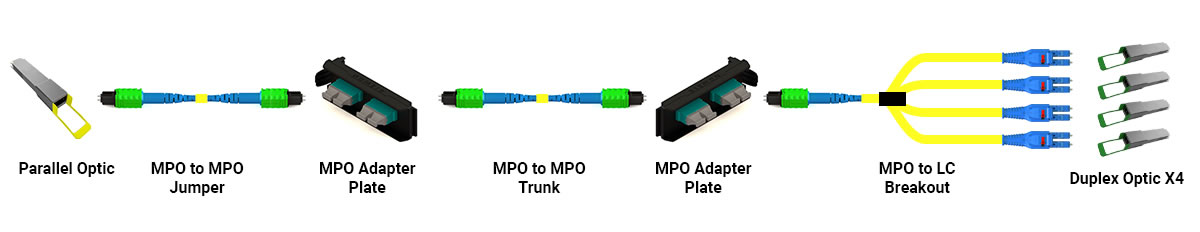

What is a structured cabling system? It is a connectivity design that strategically places patch panels or enclosures throughout the data center so that devices can be connected to the network with short patch cords or jumpers. The cabling between the patch panels and enclosures is the “structured” part: it stays in place for years, while the patch cords and jumpers at the ends can be plugged into and out of the cabling system as devices change. Figure 1 shows a common fiber structured cabling channel supporting duplex LC fiber connections. Note that the optical transceivers in the compute and storage devices dictate which fiber type and connector must be used, and different devices often use different fiber and connector types. The choice of fiber and connector is therefore best determined by the application, based on the speed and distance of each connection. With proper planning, the structured cabling infrastructure can be specified to support multiple generations of data center applications, eliminating the need to re-cable for each upgrade.

Figure 1

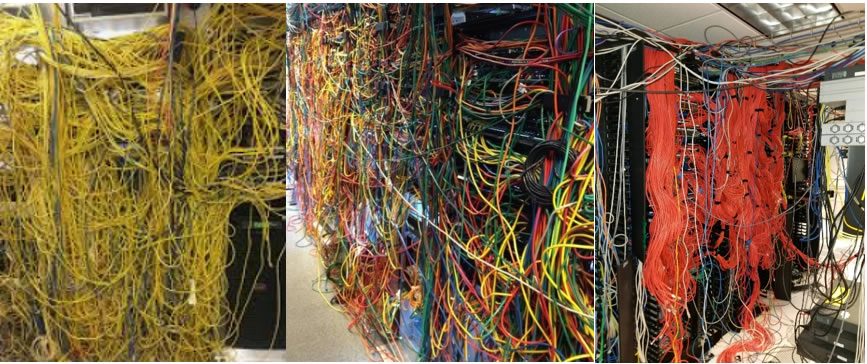

The alternative to a structured cabling system is point-to-point cabling. This method is less expensive, requires little planning, and is easy to execute at first. The downside appears when devices need to be added, moved, or removed from the network. Upgrades often require new cables, and existing cables are often left in place, creating unnecessary pathway congestion. When installing a new point-to-point cable, technicians often choose a cable longer than needed to be sure it will reach the devices at each end. Over time, these excess lengths become difficult to manage and block the airflow paths in cabinets and racks that cool the data center equipment, which in turn increases the energy needed to cool the compute and storage devices. As the photos below illustrate, once neatly installed fiber cabling begins to resemble a tangled web that is hard to navigate and manage. The inefficiency of point-to-point cabling, initially masked by its ease of execution, eventually takes center stage, underscoring the value of a well-planned structured cabling system.

Figure 2

A structured cabling system offers many advantages over point-to-point cabling in the data center. Below are seven main reasons:

- Ease of Management: Structured cabling provides a systematic, organized approach to managing cables within the data center. It makes cables easy to identify, trace, and manage, which simplifies maintenance and troubleshooting. Cable trunks or bundles reduce the pathway and conduit space required, leaving more room for cabling growth.

- Scalability: Structured cabling systems are designed to accommodate future growth and changes in technology. They can readily absorb additional equipment, upgrades, and expansions within the data center without significant reconfiguration or downtime. Structured cabling also lets multiple generations of compute and storage equipment work together seamlessly, even when they use different connector types.

- Reliability and Performance: A well-designed structured cabling system minimizes signal interference, crosstalk, and other issues that can degrade network performance. This ensures reliable, consistent data transmission throughout the data center, which is crucial for maintaining operational efficiency. A structured cabling system installed by a Siemon Certified Installer is covered by a 25-year warranty.

- Flexibility: Structured cabling systems support many types of network equipment and technologies. They can accommodate different networking standards, protocols, applications, and optical transceivers, allowing data center operators to integrate new devices and technologies as needed. Relocating equipment requires only patch cord changes rather than new cable installations.

- Reduced Downtime: By minimizing cable clutter, simplifying cable management, and providing consistent administration, structured cabling helps reduce the risk of accidental cable disconnections and other human errors that can lead to network downtime. This helps improve the overall reliability and availability of services within the data center.

- Cost-Effectiveness: While the initial investment in structured cabling may be higher compared to traditional cabling methods, it offers long-term cost savings by reducing maintenance costs, minimizing downtime, and providing a scalable infrastructure that can adapt to changing business needs over time.

- Standards Compliance: As mentioned earlier, the TIA-942 and ISO/IEC 24764 industry standards detail best practices that ensure compatibility with a wide range of networking equipment and technologies. This simplifies interoperability and integration within the data center environment.

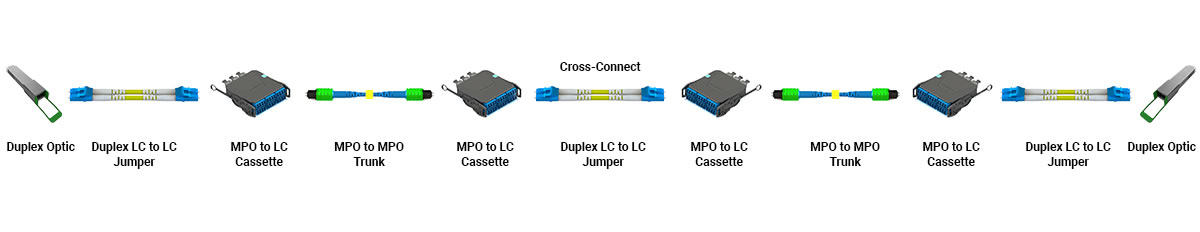

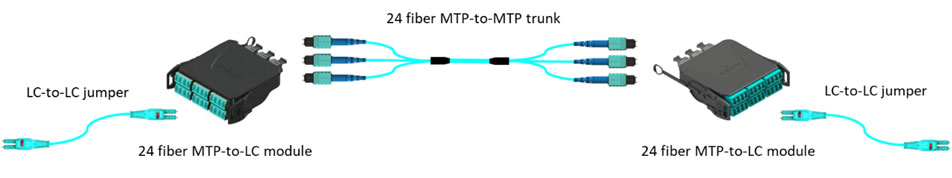

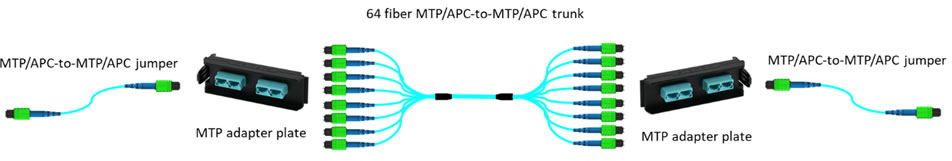

As AI compute and storage devices are installed in the data center, more fiber cabling is needed to support the higher speeds that graphics processing units (GPUs) require to function properly. A basic AI compute architecture has 128 nodes (servers) with 16 spine switches and 32 leaf switches. The number of compute fiber strands between these devices is 8,192! That figure does not include the storage, in-band management, and out-of-band management connectivity the architecture also needs. A structured cabling system connecting the network racks to the servers and switches helps manage all of these cables. Figure 3 shows the anatomy of a common AI channel using multimode fiber with angled physical contact (APC) MTP connectors that hold 8 fibers each. The MTP-to-MTP trunks can scale up in fiber count to best match the application.
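The 8,192 figure can be reproduced with simple arithmetic under one set of assumptions, which also helps when sizing MTP trunks. The sketch below assumes (this breakdown is not stated in the article) that each of the 128 nodes has 8 optical compute ports and that each port is a parallel-optic link using 8 fibers, matching the 8-fiber MTP connectors in Figure 3. The spine and leaf switch counts are not used because the article does not say how they enter the total. The class and function names and the 96- and 128-fiber trunk sizes are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ComputeFabric:
    """Hypothetical description of a GPU compute network (assumed values)."""
    nodes: int            # number of GPU servers (128 in the article)
    ports_per_node: int   # ASSUMED optical compute ports per server
    fibers_per_port: int  # ASSUMED fibers per parallel-optic port (Tx + Rx)


def compute_fibers(fabric: ComputeFabric) -> int:
    """Total fiber strands on the node-facing compute links under these assumptions."""
    return fabric.nodes * fabric.ports_per_node * fabric.fibers_per_port


def connectors_needed(fibers: int, fibers_per_connector: int = 8) -> int:
    """Connectors needed at each end of the cabling (8-fiber MTP by default)."""
    if fibers_per_connector <= 0:
        raise ValueError("fibers_per_connector must be positive")
    return math.ceil(fibers / fibers_per_connector)


def trunks_needed(fibers: int, fibers_per_trunk: int) -> int:
    """Number of MTP-to-MTP trunks of a given fiber count, rounded up."""
    if fibers_per_trunk <= 0:
        raise ValueError("fibers_per_trunk must be positive")
    return math.ceil(fibers / fibers_per_trunk)


if __name__ == "__main__":
    # Assumed breakdown: 128 nodes x 8 ports x 8 fibers per port.
    fabric = ComputeFabric(nodes=128, ports_per_node=8, fibers_per_port=8)
    total = compute_fibers(fabric)
    print(f"compute fibers: {total}")
    print(f"8-fiber MTP connectors per end: {connectors_needed(total)}")
    for size in (96, 128):  # hypothetical trunk fiber counts
        print(f"{size}-fiber trunks: {trunks_needed(total, size)}")
```

With these assumed values the script reports 8192 compute fibers, 1024 eight-fiber connectors at each end, and 86 trunks at 96 fibers or 64 trunks at 128 fibers. Changing `ports_per_node` or `fibers_per_port` to match a different transceiver choice shows how strongly the fiber count depends on the optics.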

Figure 3

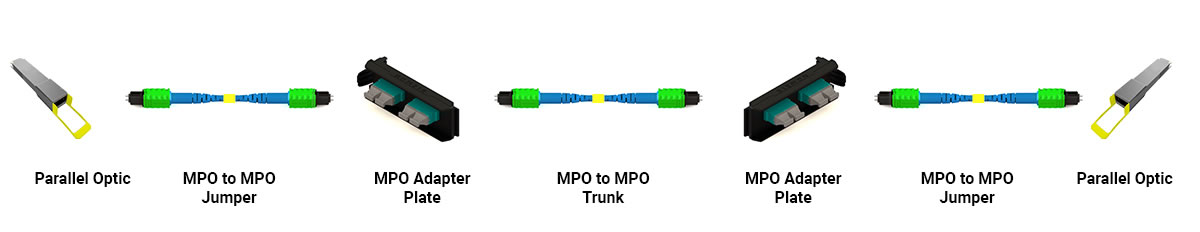

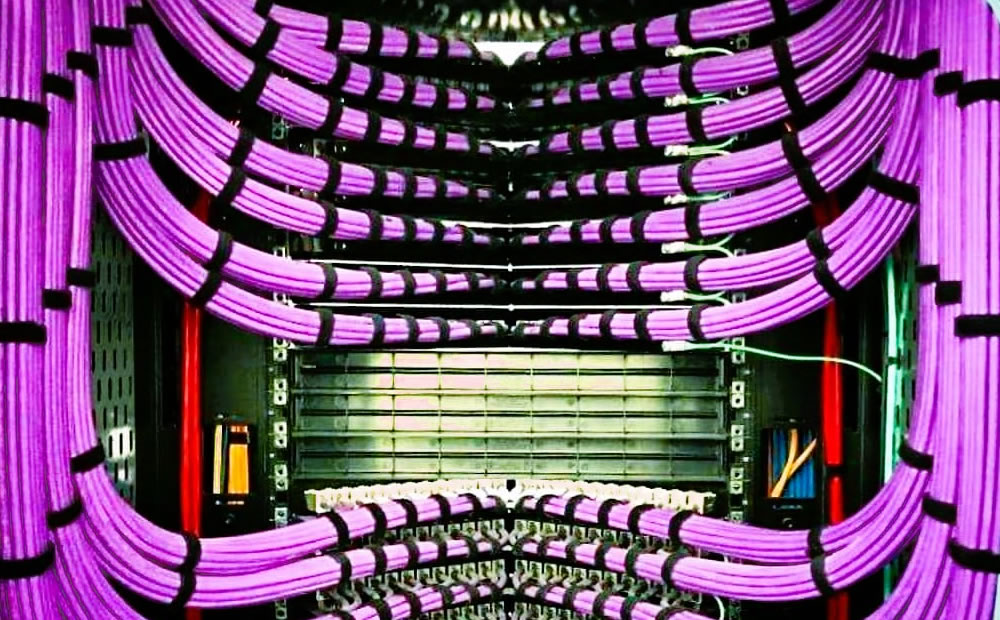

Structured copper cabling systems are also used in the data center. Copper trunks speed up cabling deployments by eliminating the time needed for field connector terminations. Figure 4 illustrates a typical structured copper trunk application. Used strategically, these trunks can significantly improve the overall efficiency and reliability of a data center’s cabling system.

Figure 4

In summary, data centers, machine learning, and AI are not just about computing power and sophisticated algorithms. They also depend on the silent workhorses behind the scenes: well-structured fiber and copper cabling systems that enable these technological marvels to function seamlessly. So the next time you marvel at the capabilities of data centers and AI, take a moment to appreciate the intricate connectivity that makes it all possible.

Want to learn about Siemon AI Solutions? Visit our Generative AI webpage at www.siemon.com/ai.