Are Supply Chain Issues and Extended Fiber Cabling Lead Times Delaying Your Network and Data Center Projects?

Although the global economy is slowly starting to recover, one destructive issue left in the wake of the waning Covid-19 pandemic is the major disruption to the global supply chain. Existing inefficiencies in the supply chain have been compounded by border restrictions, labor and material shortages, skyrocketing demand following lockdowns, weather events, and geopolitical factors, to name a few. The result is bottlenecks at every link of the supply chain, with prices and lead times driven to all-time highs. The Institute for Supply Management’s latest survey of purchasing managers shows that the average lead time for production materials increased from 15 days to 92 days in the third quarter this year, the highest level seen since 1987.

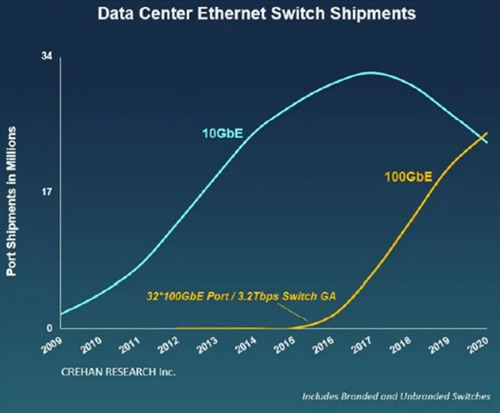

While many of the containers piling up in ports contain consumer goods, the information and communications technology industry is certainly not immune to this crisis, especially considering the shortage of raw materials, chips, capacitors, and resistors used in network equipment and sub-component assemblies. With many planned upgrades that were put on hold at the onset of the pandemic now ready to ramp up, data center and network infrastructure owners and operators are increasingly frustrated by long lead times that hinder the very thing that Covid-19 accelerated: the hunger for more information and connectivity.

In the face of these challenges, leading industry manufacturers are innovating to maintain resiliency and meet customer expectations by diversifying suppliers and reducing reliance on overseas sources, as well as implementing advanced inventory management strategies such as increasing forecasting, localizing production, and expanding distribution plans. At Siemon, we’re taking it one step further with guaranteed expedited shipping on mission-critical fiber cabling solutions through our FiberNOW™ fast ship program.

Leveraging new advances in logistics and significantly expanding Siemon’s already best-in-class ISO 9001 manufacturing and warehousing capabilities, FiberNOW guarantees that its extensive list of multimode and singlemode fiber cabling and connectivity products, including both standard and custom configurable options, will leave our facility in 5 days or less!

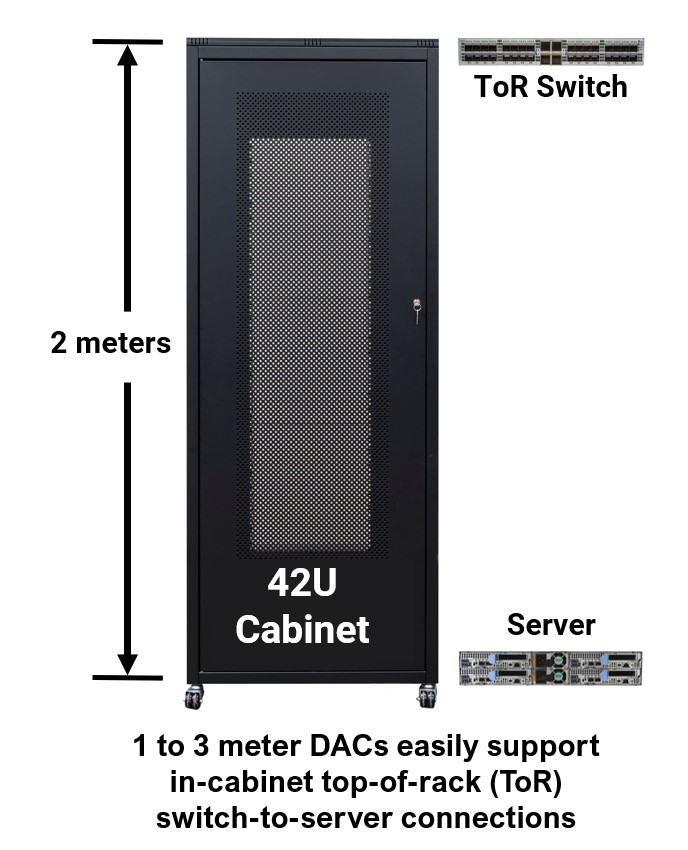

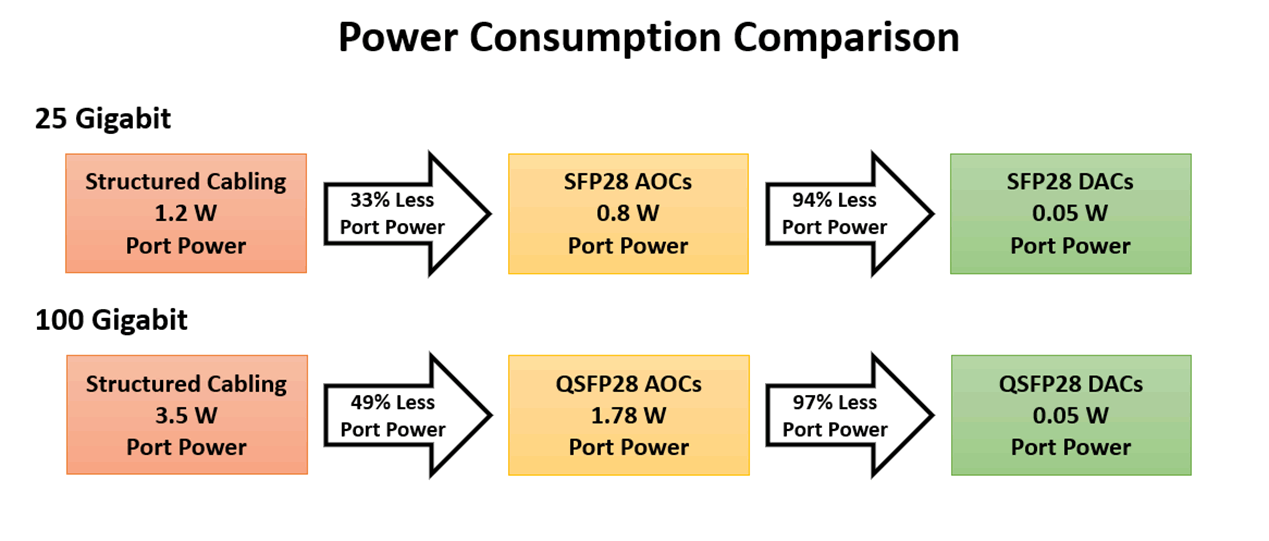

FiberNOW solutions include plug-and-play LC and MTP OM4 and OS2 jumpers and trunks, MTP-LC assemblies, cassettes, modules, and enclosures: everything today’s network and data center owners and operators need to deploy 10 to 400 Gigabit switch-to-switch and switch-to-server links. The FiberNOW solution allows new services and applications to be deployed quickly to meet ever-increasing demand.

- Innovative BladePatch® LC Jumpers, available in 1, 2, or 3 meter lengths in OM4 or OS2, are ideal for high-density fiber optic patching applications. Featuring the revolutionary Uniclick™ push-pull boot for easy access and removal, and a smaller-footprint, one-piece connector body with an integrated polarity flip, BladePatch® patch cords enable faster and denser network deployments.

- Base 8 MTP to LC BladePatch jumpers in OM4 or OS2 offer a connectivity transition to facilitate interconnects or cross connects between active equipment.

- Base 8 MTP jumpers in OM4 or OS2 feature 2 mm diameter RazorCore™ cable to reduce pathway congestion and improve airflow.

- High-performance plug-and-play Base 8 and Base 12 MTP and LC OM4 or OS2 trunks in a variety of counts up to 144 fibers.

- High-density 1U Fiber Connect Panel (FCP3) and 2U and 4U Rack Mount Interconnect Center (RIC3) enclosures are compatible with an array of Siemon fiber Quick-Pack™ adapter plates and modules, in your choice of fiber adapters and port densities.

- High-density FCP3 LC Adapters and standard-density Quick-Pack LC Adapters in 12 or 24 fiber counts for use in FCP3 and RIC enclosures.

- OM4 and OS2 high-density PPM cassettes and standard-density PP2 LC to MTP cassettes in 12 or 24 counts for use in FCP3 and RIC enclosures.

Instead of being frustrated with supply chain issues and long lead times that are delaying your data center network upgrades and expansions, get the fiber you need now with Siemon’s FiberNOW program.

Learn more: FiberNOW™ – Fast Ship Program