Data Center Challenges: The Importance of Power, Cooling and Connectivity

Written by Dave Fredricks

The trend in data center deployment today is to build smaller spaces in more locations, closer to users, to reduce latency and increase redundancy. These are known as Edge Data Centers. This approach departs from past planning, which relied on one or two large enterprise data centers plus a secondary disaster recovery (DR) site. The advantages of placing compute assets in multiple locations are clear, but managing these smaller sites can be difficult in terms of staffing, onsite monitoring, and the ability to support current and next-generation computing equipment.

The big three components of designing a new data center space are Power, Cooling and Connectivity. Power requirements are addressed at the beginning of the process, starting from the critical load (the power needed at full IT load for all compute equipment), expressed as a value of N. Put simply, N is found by adding up the power needs of each cabinet across the initial build-out and then adding an allowance for future growth, which gives the total power requirement. Power for non-IT loads such as cooling and lighting must also be added. Beyond the power the space needs, there are redundancy options to choose from, such as N+1 or 2N. Once this is calculated, the power design typically remains static for several years, changing only as computing equipment is added to or removed from the data center space.
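To make the sizing steps concrete, here is a minimal Python sketch of the arithmetic described above. The cabinet loads, the 25% growth allowance, the 90 kW non-IT load and the 100 kW power module (for example, UPS module) size are all hypothetical values chosen for illustration; a real design also accounts for factors such as power factor, derating and distribution losses.

```python
import math


def critical_load_kw(cabinet_kw: list[float], growth_fraction: float) -> float:
    """Critical load N: sum of per-cabinet IT load plus a future-growth allowance."""
    return sum(cabinet_kw) * (1.0 + growth_fraction)


def total_power_kw(critical_kw: float, non_it_kw: float) -> float:
    """Total requirement: critical IT load plus non-IT loads such as cooling and lighting."""
    return critical_kw + non_it_kw


def modules_required(load_kw: float, module_kw: float, scheme: str) -> int:
    """Number of power modules needed to carry load_kw under a redundancy scheme."""
    n = math.ceil(load_kw / module_kw)  # minimum modules just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1  # one spare module beyond the minimum
    if scheme == "2N":
        return 2 * n  # a fully duplicated second system
    raise ValueError(f"unknown redundancy scheme: {scheme}")


if __name__ == "__main__":
    # Hypothetical initial build: 12 cabinets at 8 kW and 8 cabinets at 12 kW.
    cabinets = [8.0] * 12 + [12.0] * 8
    it_kw = critical_load_kw(cabinets, growth_fraction=0.25)
    print(f"Critical IT load: {it_kw:.0f} kW")
    print(f"Total with 90 kW non-IT load: {total_power_kw(it_kw, 90.0):.0f} kW")
    for scheme in ("N", "N+1", "2N"):
        count = modules_required(it_kw, module_kw=100.0, scheme=scheme)
        print(f"{scheme}: {count} x 100 kW modules")
```

With these assumed inputs the critical load works out to 240 kW, which needs 3 modules at N, 4 at N+1 and 6 at 2N. The gap between those counts is the cost of each redundancy option.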

Cooling is a more dynamic aspect of building and managing the data center space. As switches, servers and other compute equipment are added or removed in day-to-day operations, the airflow pathways in the cabinets change. As airflow changes in individual cabinets, it also changes across the entire data center space. Managing these changes is challenging, but tools are available to help. Deploying monitoring sensors throughout the data center space lets the operator see these shifts in real time and provides feedback on how best to address hot spots, overcooled areas and power usage.
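As a minimal sketch of the feedback such sensors can provide, the Python below flags readings that fall outside a temperature band. It assumes each sensor reports an inlet air temperature in °C and uses 18–27 °C, the recommended inlet range in ASHRAE's thermal guidelines for data processing environments, as the default band. The sensor IDs, locations and readings are hypothetical, and this is not how any particular DCIM product works internally.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    sensor_id: str
    location: str
    inlet_c: float  # inlet air temperature in degrees Celsius


def find_thermal_exceptions(readings, low_c=18.0, high_c=27.0):
    """Split readings into hot spots (above high_c) and overcooled spots (below low_c)."""
    hot = [r for r in readings if r.inlet_c > high_c]
    cold = [r for r in readings if r.inlet_c < low_c]
    return hot, cold


if __name__ == "__main__":
    # Hypothetical snapshot from three sensors.
    readings = [
        SensorReading("S1", "Row A, cabinet 3, top", 29.5),
        SensorReading("S2", "Row A, cabinet 4, middle", 22.0),
        SensorReading("S3", "Row B, end of row", 16.5),
    ]
    hot, cold = find_thermal_exceptions(readings)
    for r in hot:
        print(f"HOT  {r.sensor_id} at {r.location}: {r.inlet_c} C")
    for r in cold:
        print(f"COLD {r.sensor_id} at {r.location}: {r.inlet_c} C")
```

Flagging overcooled readings matters as much as flagging hot spots, because a spot that is too cold usually means cooling energy is being spent where it is not needed.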

Traditionally, monitoring sensors were hardwired into each cabinet and at other locations in the data center space, such as the end of each row, underfloor and ceiling plenum spaces, and other areas with temperature considerations. This style of environmental management falls under the category of Data Center Infrastructure Management (DCIM). DCIM is evolving, as are many processes in the data center space. Monitoring can now use low-cost thermal sensors that run on long-life batteries and connect wirelessly back to software that uses Artificial Intelligence (AI) and Machine Learning (ML). Upsite Technologies offers an AI/ML solution for this application called EkkoSense. Although this AI/ML technology is still in its early growth period, it makes many more data points available through inexpensive sensors and input devices, providing real-time information on temperature, power usage and PDU utilization across the data center space.

EkkoSense can display the information provided by the thermal sensors in formats such as a 3-D visualization, or digital twin, as shown in figure #1. Other dashboard options are available to view and oversee the entire data center space or sections of it. These dashboards can be configured around the operator's thermal and power parameters and viewed from multiple locations. The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) publishes Standard 90.4-2019, Energy Standard for Data Centers, which sets minimum energy efficiency requirements for the data center industry. Performance against these requirements can be monitored through EkkoSense, so corrective actions can be identified quickly as problems arise.
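Dashboards like these typically compare live readings against operator-defined limits. The Python sketch below shows that idea for power: it computes each PDU's utilization as measured load divided by rated capacity and flags any PDU at or above a threshold. The PDU names, ratings and the 80% default threshold are hypothetical, and the code is only an illustration, not the EkkoSense interface.

```python
from dataclasses import dataclass


@dataclass
class PduReading:
    pdu_id: str
    load_kw: float   # measured load
    rated_kw: float  # rated capacity of the PDU

    @property
    def utilization(self) -> float:
        """Fraction of rated capacity currently in use."""
        return self.load_kw / self.rated_kw


def pdus_over_limit(readings, threshold=0.8):
    """Return IDs of PDUs whose utilization is at or above the threshold."""
    return [r.pdu_id for r in readings if r.utilization >= threshold]


if __name__ == "__main__":
    # Hypothetical readings from three PDUs.
    readings = [
        PduReading("PDU-A1", load_kw=4.2, rated_kw=5.0),
        PduReading("PDU-A2", load_kw=2.5, rated_kw=5.0),
        PduReading("PDU-B1", load_kw=3.6, rated_kw=5.0),
    ]
    for r in readings:
        print(f"{r.pdu_id}: {r.utilization:.0%} of rated capacity")
    print("Over limit:", pdus_over_limit(readings))
```

Keeping the threshold as a parameter reflects the point that each operator sets its own power limits; the same pattern extends to per-zone thermal limits.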

Figure #1

Understanding that often Edge Data Centers are un-staffed and require monitoring 24/7, having a robust platform to monitor the facility is essential. Maximizing the available cold air with proper airflow management and directing hot exhaust air for recirculation will protect the computing equipment. Keeping the computing equipment cool and reducing the cost of energy to its most efficient levels will optimize the running of the data center and reduce downtime from thermal and power failures.

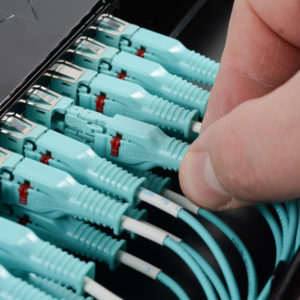

This brings us to Connectivity. Singlemode fiber enters the data center in the Entrance Room. The fiber links are provided by Internet Service Providers (ISPs) and connect the data center to the outside world. Most data centers have at least two ISP connections, and many have three to five. From the Entrance Room, singlemode fiber runs to individual floors, zones, rooms, pods (groups of cabinets arranged in a rectangular layout for cooling purposes) or cabinets.

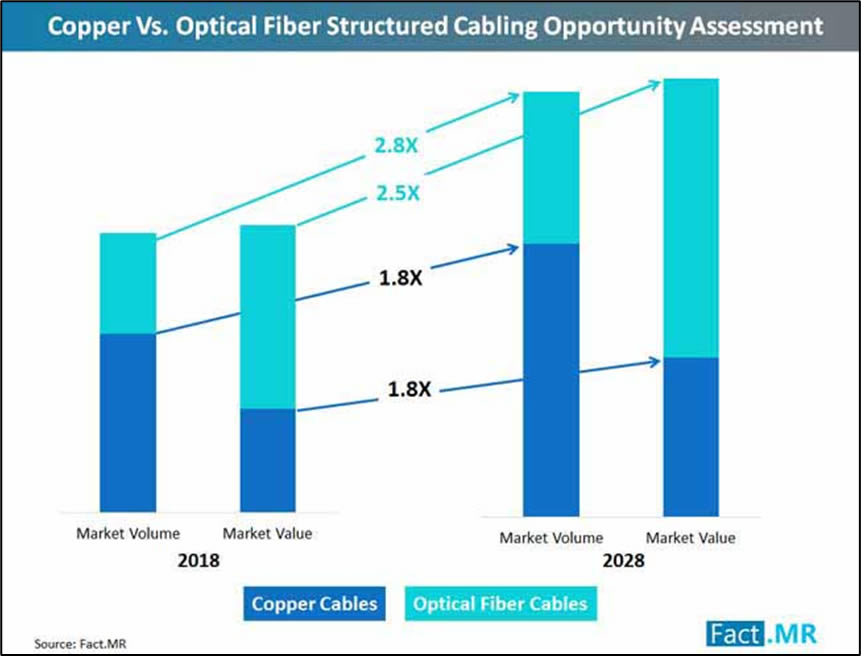

In most new data center deployments today, fiber runs from cabinet to cabinet, while copper is limited to in-cabinet connections or runs shorter than 5 meters. Fiber runs under 100 meters have often used multimode fiber instead of singlemode, because the optics that plug into the computing equipment were traditionally less expensive for multimode fiber. Over the past 2-3 years, however, the cost of singlemode optics has declined significantly, to nearly that of multimode optics. This price reduction is thanks to the large Cloud Providers, which use singlemode fiber and optics throughout their data centers. As a result, more data center operators are choosing to run singlemode fiber from cabinet to cabinet instead of multimode. Singlemode fiber also supports higher speeds (400G and beyond) over longer distances than multimode. Its greater bandwidth allows for more interconnects and more flexibility in the structured cabling plant.
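The trade-off described above can be sketched as a simple decision rule. This Python example is only an illustration under stated assumptions: it treats 100 meters as the multimode reach limit (the figure used above; actual reach depends on the fiber grade and the optic), takes hypothetical relative optic costs as inputs, and prefers singlemode unless multimode saves at least 20%, an arbitrary threshold standing in for "near the same cost."

```python
def choose_fiber(link_m: float, mm_optic_cost: float, sm_optic_cost: float,
                 mm_reach_m: float = 100.0, min_savings: float = 0.20) -> str:
    """Pick 'multimode' or 'singlemode' for one link (illustrative rule only)."""
    # Beyond the assumed multimode reach, only singlemode is an option.
    if link_m > mm_reach_m:
        return "singlemode"
    # Fractional saving from using multimode optics instead of singlemode.
    savings = (sm_optic_cost - mm_optic_cost) / sm_optic_cost
    # Near price parity, singlemode's speed and reach headroom wins.
    return "multimode" if savings >= min_savings else "singlemode"


if __name__ == "__main__":
    # Hypothetical links with relative (unitless) optic costs.
    print(choose_fiber(150, mm_optic_cost=100, sm_optic_cost=300))  # too long for multimode
    print(choose_fiber(50, mm_optic_cost=100, sm_optic_cost=300))   # large multimode saving
    print(choose_fiber(50, mm_optic_cost=100, sm_optic_cost=110))   # near price parity
```

Expressing "near the same cost" as a savings threshold mirrors the argument above: once singlemode optics cost about the same as multimode, the longer reach and higher-speed headroom tip the choice toward singlemode.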

If you have questions about thermal and power monitoring, whether to deploy singlemode or multimode fiber in your data center, or the latest structured cabling designs, please reach out to your local Siemon RSM.